Here we have a list of numbers representing the prices of items in Nigerian Naira (₦):

money_naira = [2495, 93988, 39118, 19973, 39579, 35723, 80216, 56725, 16132, 82275, 18439, 34919, 17117, 85879, 51153, 7737, 35367, 9753, 86648, 87650, 58011, 2219, 1768, 8612, 2901, 5041, 3405, 8486, 7742, 5008, 7150, 5553, 9320, 2736, 9151, 9894, 2812, 6466, 1194, 4322, 6696, 6144, 6227, 2479, 3027, 4052, 7580, 1736, 9979, 1638, 2369, 8702, 1353, 9695, 4072, 4065, 7742, 7887, 7620]

But there is a problem! These numbers are not easy for humans to read; each one is just a bare number that could mean anything. So we have to present them in a way that tells readers they are amounts of money in Nigerian Naira (₦).

That is to say, 2495 will be presented as ₦2,495.00.

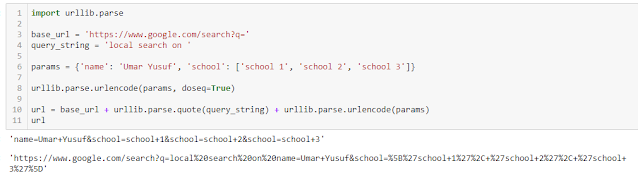

This can be achieved using Python string formatting (f-string or format()).

The f-string is the newer of the two ways to format strings in Python; it has been available since Python 3.6. You can read more in this f-string tutorial.
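To make the format specifier less opaque, here is a small sketch that builds it up piece by piece. The variable names (price, with_commas, two_decimals, combined) are only illustrative and are not part of the original example.

```python
# Illustrative breakdown of the ",.2f" format specifier (names are hypothetical).
price = 2495

with_commas = f"{price:,}"       # "," inserts a thousands separator
two_decimals = f"{price:.2f}"    # ".2f" fixes two digits after the decimal point
combined = f"₦{price:,.2f}"      # both together, prefixed with the Naira sign

print(with_commas)   # 2,495
print(two_decimals)  # 2495.00
print(combined)      # ₦2,495.00
```

The same specifier works unchanged with format(), since both share one format-specification mini-language.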

Let's see how it is done.

for money in money_naira:
    # Using f-string
    print(f"₦{money:,.2f}")
    # Using the str.format() method
    print("₦{:,.2f}".format(money))

Both methods give the same result. The output, condensed here onto one line per method, is:

₦2,495.00, ₦93,988.00, ₦39,118.00, ₦19,973.00, ₦39,579.00, ₦35,723.00, ₦80,216.00, ₦56,725.00, ₦16,132.00, ₦82,275.00, ₦18,439.00, ₦34,919.00, ₦17,117.00, ₦85,879.00, ₦51,153.00, ₦7,737.00, ₦35,367.00, ₦9,753.00, ₦86,648.00, ₦87,650.00, ₦58,011.00, ₦2,219.00, ₦1,768.00, ₦8,612.00, ₦2,901.00, ₦5,041.00, ₦3,405.00, ₦8,486.00, ₦7,742.00, ₦5,008.00, ₦7,150.00, ₦5,553.00, ₦9,320.00, ₦2,736.00, ₦9,151.00, ₦9,894.00, ₦2,812.00, ₦6,466.00, ₦1,194.00, ₦4,322.00, ₦6,696.00, ₦6,144.00, ₦6,227.00, ₦2,479.00, ₦3,027.00, ₦4,052.00, ₦7,580.00, ₦1,736.00, ₦9,979.00, ₦1,638.00, ₦2,369.00, ₦8,702.00, ₦1,353.00, ₦9,695.00, ₦4,072.00, ₦4,065.00, ₦7,742.00, ₦7,887.00, ₦7,620.00

₦2,495.00, ₦93,988.00, ₦39,118.00, ₦19,973.00, ₦39,579.00, ₦35,723.00, ₦80,216.00, ₦56,725.00, ₦16,132.00, ₦82,275.00, ₦18,439.00, ₦34,919.00, ₦17,117.00, ₦85,879.00, ₦51,153.00, ₦7,737.00, ₦35,367.00, ₦9,753.00, ₦86,648.00, ₦87,650.00, ₦58,011.00, ₦2,219.00, ₦1,768.00, ₦8,612.00, ₦2,901.00, ₦5,041.00, ₦3,405.00, ₦8,486.00, ₦7,742.00, ₦5,008.00, ₦7,150.00, ₦5,553.00, ₦9,320.00, ₦2,736.00, ₦9,151.00, ₦9,894.00, ₦2,812.00, ₦6,466.00, ₦1,194.00, ₦4,322.00, ₦6,696.00, ₦6,144.00, ₦6,227.00, ₦2,479.00, ₦3,027.00, ₦4,052.00, ₦7,580.00, ₦1,736.00, ₦9,979.00, ₦1,638.00, ₦2,369.00, ₦8,702.00, ₦1,353.00, ₦9,695.00, ₦4,072.00, ₦4,065.00, ₦7,742.00, ₦7,887.00, ₦7,620.00
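Note that print() inside a loop writes one price per line. If a single comma-separated line like the one shown is wanted, one possible approach is to format every price first and then join the results. This is a minimal sketch using only the first three prices; sample_prices, formatted and line are hypothetical names chosen for illustration.

```python
# A short sample taken from the start of the price list.
sample_prices = [2495, 93988, 39118]

# Format each price, then join the strings with ", " into one line.
formatted = [f"₦{price:,.2f}" for price in sample_prices]
line = ", ".join(formatted)

print(line)  # ₦2,495.00, ₦93,988.00, ₦39,118.00
```

Swapping sample_prices for the full money_naira list should produce the complete line.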

That is it!