One way to obtain the latitude and longitude of an object on OpenStreetMap (OSM), given its node ID, is via the OSM API.

In this post, we will see how to use the OSM API to retrieve the latitude and longitude for a given node ID. The API endpoint for this is https://api.openstreetmap.org/api/0.6/node/{osm_id}, which by default returns the result in XML.

To get the result in JSON instead, append '.json' to the end of the endpoint URL, like so: https://api.openstreetmap.org/api/0.6/node/{osm_id}.json

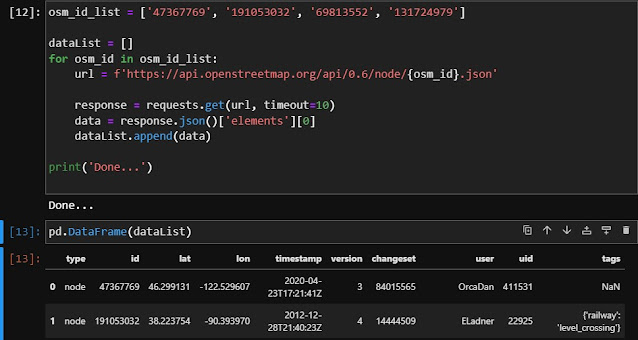
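To make the shape of the JSON response concrete, here is a minimal sketch that pulls the coordinates out of a response-like dictionary. The payload is a hand-written stand-in (the ID, coordinates and tag are made up for illustration), and `extract_coordinates` is a hypothetical helper name, not part of the OSM API.

```python
# Hypothetical payload mimicking the shape of a node response.
# The id, coordinates and tag values are made up for illustration.
sample_response = {
    "version": "0.6",
    "elements": [
        {
            "type": "node",
            "id": 123,
            "lat": 51.5,
            "lon": -0.12,
            "tags": {"name": "Example"},
        }
    ],
}


def extract_coordinates(payload):
    """Return (lat, lon) for the first element of a node response."""
    node = payload["elements"][0]  # a single-node request yields one element
    return node["lat"], node["lon"]


lat, lon = extract_coordinates(sample_response)
print(lat, lon)
```

The node's details sit in the first item of the `elements` list, which is why the script in this post indexes `['elements'][0]`.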

The script below shows how to retrieve the latitude, longitude and other details for each node in this list of OSM IDs: ['47367769', '191053032', '69813552', '131724979'].

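    import requests
    import pandas as pd

    osm_id_list = ['47367769', '191053032', '69813552', '131724979']
    dataList = []

    for osm_id in osm_id_list:
        # Request the node as JSON rather than the default XML
        url = f'https://api.openstreetmap.org/api/0.6/node/{osm_id}.json'
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # stop early on an HTTP error status

        # The node's details (id, lat, lon, ...) are the first item under 'elements'
        data = response.json()['elements'][0]
        dataList.append(data)

    print('Done...')

That is it!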
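As a possible follow-up, the collected dictionaries can be loaded into a pandas DataFrame to view the coordinates side by side. This is only a sketch: `sample_data_list` is a made-up stand-in for `dataList` (so the snippet runs without network access), and the column selection is an assumption about which fields are of interest.

```python
import pandas as pd

# Made-up stand-in for dataList, so the example runs offline.
# Real entries carry more keys (timestamp, version, user, ...).
sample_data_list = [
    {"type": "node", "id": 1, "lat": 10.0, "lon": 20.0, "tags": {"name": "A"}},
    {"type": "node", "id": 2, "lat": -5.5, "lon": 30.25},  # a node with no tags
]

# Each dictionary becomes a row; keys missing from a row are filled with NaN.
df = pd.DataFrame(sample_data_list)

# Keep only the identifier and the coordinates.
coords = df[["id", "lat", "lon"]]
print(coords)
```

With the real `dataList`, the same two lines (`pd.DataFrame(dataList)` followed by the column selection) should give one row per OSM ID.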