An HTTP Archive file (shortened to 'HAR file') is a JSON-formatted log of the interactions between a web browser and a website. The common extension for these files is '.har'.
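To make the structure concrete, here is a heavily trimmed, hand-written sketch of what a HAR document looks like, following the HAR 1.2 layout: a top-level `log` object holding an `entries` list, where each entry pairs a `request` with its `response`. The creator name, URL and response body are made-up placeholders; real exports carry many more fields (headers, cookies, timings and so on).

```python
import json

# A hypothetical, heavily trimmed HAR document. Real exports contain many
# more fields (headers, cookies, timings, cache info, ...).
minimal_har = """
{
  "log": {
    "version": "1.2",
    "creator": {"name": "ExampleBrowser", "version": "1.0"},
    "entries": [
      {
        "request": {"method": "GET", "url": "https://example.com/data.json"},
        "response": {
          "status": 200,
          "content": {"mimeType": "application/json", "text": "{\\"answer\\": 42}"}
        }
      }
    ]
  }
}
"""

har = json.loads(minimal_har)

# Each entry pairs one request with the response the browser received
entry = har["log"]["entries"][0]
print(entry["request"]["url"])               # https://example.com/data.json
print(entry["response"]["content"]["text"])  # {"answer": 42}
```

Note that the response body is stored as a string inside the JSON, so a JSON response body needs a second `json.loads()` call once you have pulled it out.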

In Python, there is a third-party module called "Haralyzer" for getting useful information out of HAR files.

Since HAR files are plain JSON, I will not use the "Haralyzer" module; instead, I will read the .har file and extract the data from the text myself. Another reason I don't want to use the library is that I'd rather not install a new third-party library on my machine, especially since the haralyzer module depends on yet another third-party library, "six".

Other than that, there is nothing wrong with using a library that reads .har files directly.

Let's get our hands dirty...

How to get a HAR file

Practically any website that exchanges data with the browser as JSON can be captured this way: the browser's developer tools record the network traffic on the client side and can export it as a .har file.

Let's use this USGS earthquake data website. Open the website, open your browser's developer tools, then select the 'Network' tab >> XHR >> Export HAR...

This downloads a HAR file that contains the JSON representation of the earthquake data, as seen below...

Save the file under any name in a location you can remember; we will use it in the next section. Note that the earthquake data inside the file is GeoJSON with padding (GeoJSONP), that is, GeoJSON wrapped in a JavaScript function call.

Extracting data from .har file

Now that we have a .har file, let's extract its content. As I stated earlier, I will not use the "Haralyzer" module; instead, I will use the json module, which is part of Python's standard library (so it is available in an Anaconda setup without installing anything).

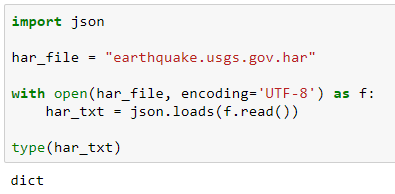

Open the .har file, use json.load() to parse its content into a dictionary, and check the type to confirm that it really is a dictionary.

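```python
import json

har_file = 'earthquake.usgs.gov.har'

# HAR files are plain JSON text (UTF-8), so json.load() parses them directly
with open(har_file, encoding='utf-8') as f:
    har_txt = json.load(f)

type(har_txt)  # dict
```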

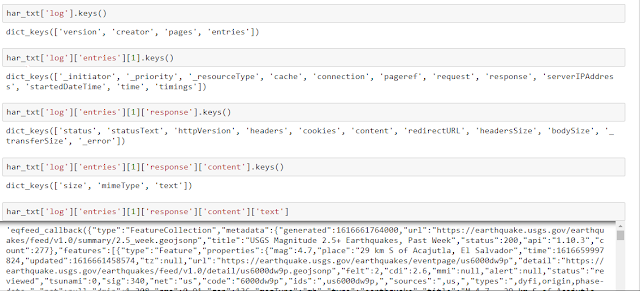

Since we now know that har_txt is a dictionary, we can check its keys with har_txt.keys().
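Here is a small sketch of checking the keys and listing which requests the file captured, which makes it easier to spot the entry holding the data. So that it runs without the real file, `har_txt` below is a hypothetical stand-in with made-up values; with the real export you would skip that assignment and use the dictionary loaded above.

```python
# Hypothetical stand-in for the parsed .har file; in the tutorial, har_txt
# comes from json.load() on earthquake.usgs.gov.har.
har_txt = {
    "log": {
        "version": "1.2",
        "creator": {"name": "ExampleBrowser", "version": "1.0"},
        "entries": [
            {
                "request": {"url": "https://example.com/feed.js"},
                "response": {"content": {"mimeType": "application/javascript",
                                         "text": "eqfeed_callback({});"}},
            },
        ],
    }
}

print(list(har_txt.keys()))         # ['log']
print(list(har_txt["log"].keys()))  # ['version', 'creator', 'entries']

# Summarise every captured request: index, MIME type and URL
for i, entry in enumerate(har_txt["log"]["entries"]):
    content = entry["response"]["content"]
    print(i, content.get("mimeType"), entry["request"]["url"])
```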

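Generally speaking, the required data can be reached through this path: log >> entries >> response >> content >> text. Note that entries is a list with one item per captured request, so you have to pick the entry whose response holds the earthquake data.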
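The exact selection code isn't shown in the post, so here is a minimal sketch of walking that path. It assumes the wanted response is the first one whose body contains the `eqfeed_callback(` marker; the helper name `find_response_text` and the stand-in data are hypothetical. It also handles bodies that the HAR format allows to be stored base64-encoded (signalled by `"encoding": "base64"` on the content object).

```python
import base64


def find_response_text(har, marker):
    """Return the first response body in the HAR that contains `marker`.

    Walks log >> entries >> response >> content >> text. Entries without a
    recorded body (no 'text' key) are skipped. Returns None if nothing matches.
    """
    for entry in har["log"]["entries"]:
        content = entry["response"]["content"]
        text = content.get("text")
        if text is None:
            continue
        # Bodies may be stored base64-encoded; decode them back to text
        if content.get("encoding") == "base64":
            text = base64.b64decode(text).decode("utf-8", errors="replace")
        if marker in text:
            return text
    return None


# Hypothetical stand-in for the parsed .har file (har_txt in the tutorial)
har_txt = {
    "log": {
        "entries": [
            {"response": {"content": {"mimeType": "text/html"}}},
            {"response": {"content": {
                "mimeType": "application/javascript",
                "text": 'eqfeed_callback({"type":"FeatureCollection","features":[]});',
            }}},
        ]
    }
}

required_data = find_response_text(har_txt, "eqfeed_callback(")
print(required_data[:16])  # eqfeed_callback(
```

If several entries match, this returns the first one. Alternatively, after listing the entries you can index directly, e.g. har_txt['log']['entries'][i]['response']['content']['text'] for the index i you identified.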

Since the original text is GeoJSON with padding (GeoJSONP), we remove the callback wrapper eqfeed_callback( ... ); and then parse the remaining GeoJSON text.

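```python
# Remove the JSONP wrapper "eqfeed_callback( ... );" to leave plain GeoJSON
earthquake_data = required_data.replace('eqfeed_callback(', '').replace(');', '')

earthquake_data = json.loads(earthquake_data)

type(earthquake_data)  # dict
```

The earthquakes themselves are in the 'features' list. Load that list into a pandas DataFrame and pull the useful fields out of each feature's 'geometry' and 'properties':

```python
import pandas as pd

# One row per earthquake; each row has 'geometry' and 'properties' dicts
dfq = pd.DataFrame(earthquake_data['features'])

# GeoJSON coordinates are ordered [longitude, latitude, depth]
dfq['Longitude'] = [geom['coordinates'][0] for geom in dfq['geometry']]
dfq['Latitude'] = [geom['coordinates'][1] for geom in dfq['geometry']]

# Copy selected fields out of each feature's properties
dfq['Place'] = [prop['place'] for prop in dfq['properties']]
dfq['Title'] = [prop['title'] for prop in dfq['properties']]
dfq['Magnitude'] = [prop['mag'] for prop in dfq['properties']]
dfq['Time'] = [prop['time'] for prop in dfq['properties']]
dfq['Updated'] = [prop['updated'] for prop in dfq['properties']]
dfq['URL'] = [prop['url'] for prop in dfq['properties']]
dfq['Detail'] = [prop['detail'] for prop in dfq['properties']]

dfq
```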
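One caveat with the replace() approach used for unwrapping: it also deletes any ');' that happens to occur inside the data itself (silently altering that text), and it depends on the exact callback name. A slightly more defensive alternative, swapped in here and not the approach used above, keeps everything between the first '(' and the last ')'. The function name `strip_jsonp` and the sample string are hypothetical.

```python
import json


def strip_jsonp(text):
    """Return the JSON payload inside a JSONP wrapper such as name( ... );

    Raises ValueError if the text contains no parentheses at all.
    """
    start = text.index("(") + 1  # just after the opening parenthesis of the call
    end = text.rindex(")")       # the closing parenthesis of the call
    return text[start:end]


sample = 'eqfeed_callback({"type": "FeatureCollection", "title": "a);b", "features": []});'
data = json.loads(strip_jsonp(sample))
print(data["title"])  # a);b
```

With the real file, json.loads(strip_jsonp(required_data)) would take the place of the two replace() calls.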

That is it!
