The task at hand is to prepare a spreadsheet file with links to "Appraisal District Maps in Harris County, Texas".

There are twenty-two (22) appraisal districts listed on the facet-maps page above, so we now need the links to each district's detailed maps in PDF format.

For example, if you open the Houston facet map, you will see several facet numbers that lead to the detailed maps.

https://public.hcad.org/maps/Houston.asp

If you click any of the numbers (for example, 5156A), it should take you to a page like the one below, where the map is subdivided into 12 detailed maps in PDF format.

https://public.hcad.org/cgi-bin/IMap.asp?map=5156A

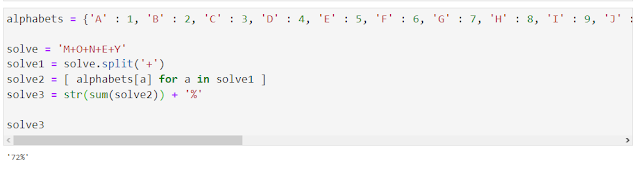
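Because each facet page follows a fixed URL pattern, the 12 detailed-map links for one facet can be generated without visiting the page. This is a minimal sketch that reuses the two URL patterns from this post (the facet page under `/cgi-bin/IMap.asp?map=` and the PDF tiles under `/iMaps/Tiles/Color/`, as in the script at the end of this section). It assumes every facet has exactly 12 PDFs named `<facet><n>.pdf`; the function names `facet_page_url` and `detail_pdf_links` are hypothetical.

```python
BASE = "https://public.hcad.org"


def facet_page_url(facet: str) -> str:
    """URL of the facet page, e.g. .../cgi-bin/IMap.asp?map=5156A."""
    return f"{BASE}/cgi-bin/IMap.asp?map={facet}"


def detail_pdf_links(facet: str, tiles: int = 12) -> list[str]:
    """Links to the detailed PDF maps of one facet, numbered 1..tiles.

    Assumes (as in the script below) the naming pattern <facet><n>.pdf
    under /iMaps/Tiles/Color/.
    """
    return [f"{BASE}/iMaps/Tiles/Color/{facet}{n}.pdf" for n in range(1, tiles + 1)]


print(facet_page_url("5156A"))
for link in detail_pdf_links("5156A")[:2]:
    print(link)
```

If a facet turns out to have fewer than 12 tiles, the `tiles` parameter can be lowered for that facet instead of hard-coding 12 everywhere.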

Scraping the links was not easy, as the website has anti-scraping mechanisms in place. Instead, we can manually collect the HTML source tags that contain what we want, then use Python scripting to wrangle them into the required format.
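Once the `<map>` snippet has been copied from the page source, the wrangling step needs no network access at all. The script in this post uses BeautifulSoup; as an alternative, here is a minimal sketch that uses only Python's standard library (`html.parser` and `urllib.parse`). It assumes every `<area>` tag's `href` carries the facet number in a `map=` query parameter; the class name `AreaCollector` is hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse, parse_qs


class AreaCollector(HTMLParser):
    """Collects the facet number from every <area href="...?map=XXXX"> tag."""

    def __init__(self):
        super().__init__()
        self.facets = []

    def handle_starttag(self, tag, attrs):
        if tag != "area":
            return
        href = dict(attrs).get("href") or ""
        # parse_qs turns "map=5156A" into {"map": ["5156A"]}
        values = parse_qs(urlparse(href).query).get("map")
        if values:
            self.facets.append(values[0])


snippet = '''<map name="ISDMap">
<area shape="rect" coords="409,369 , 448,394" href="/cgi-bin/IMap.asp?map=5156A" alt="">
<area shape="rect" coords="447,369 , 488,394" href="/cgi-bin/IMap.asp?map=5156B" alt="">
</map>'''

collector = AreaCollector()
collector.feed(snippet)
collector.close()
print(collector.facets)  # ['5156A', '5156B']
```

Reading the query string with `parse_qs` rather than splitting on `=` keeps working if the site ever adds a second parameter to the link.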

For example, for each district the facet numbers are embedded in a `<map>...</map>` tag, as shown below.

iMap = ''' <map name="ISDMap">

<area shape="rect" coords="487, 59 , 525, 86" href="/cgi-bin/IMap.asp?map=5262A" alt="">

<area shape="rect" coords="525, 59 , 564, 86" href="/cgi-bin/IMap.asp?map=5262B" alt="">

<area shape="rect" coords="564, 59 , 604, 85" href="/cgi-bin/IMap.asp?map=5362A" alt="">

<area shape="rect" coords="603, 59 , 643, 86" href="/cgi-bin/IMap.asp?map=5362B" alt="">

<area shape="rect" coords="643, 59 , 682, 85" href="/cgi-bin/IMap.asp?map=5462A" alt="">

<area shape="rect" coords="682, 59 , 722, 86" href="/cgi-bin/IMap.asp?map=5462B" alt="">

<area shape="rect" coords="487, 86 , 525,111" href="/cgi-bin/IMap.asp?map=5262C" alt="">

<area shape="rect" coords="524, 85 , 565,110" href="/cgi-bin/IMap.asp?map=5262D" alt="">

<area shape="rect" coords="564, 85 , 604,111" href="/cgi-bin/IMap.asp?map=5362C" alt="">

<area shape="rect" coords="603, 85 , 643,111" href="/cgi-bin/IMap.asp?map=5362D" alt="">

<area shape="rect" coords="643, 85 , 682,110" href="/cgi-bin/IMap.asp?map=5462C" alt="">

<area shape="rect" coords="681, 85 , 722,111" href="/cgi-bin/IMap.asp?map=5462D" alt="">

<area shape="rect" coords="486,110 , 525,137" href="/cgi-bin/IMap.asp?map=5261A" alt="">

<area shape="rect" coords="525,110 , 565,137" href="/cgi-bin/IMap.asp?map=5261B" alt="">

<area shape="rect" coords="564,110 , 604,137" href="/cgi-bin/IMap.asp?map=5361A" alt="">

<area shape="rect" coords="603,110 , 643,137" href="/cgi-bin/IMap.asp?map=5261B" alt="">

<area shape="rect" coords="643,110 , 682,137" href="/cgi-bin/IMap.asp?map=5461A" alt="">

<area shape="rect" coords="681,110 , 722,137" href="/cgi-bin/IMap.asp?map=5461B" alt="">

<area shape="rect" coords="368,137 , 409,163" href="/cgi-bin/IMap.asp?map=5061D" alt="">

<area shape="rect" coords="408,137 , 447,163" href="/cgi-bin/IMap.asp?map=5161C" alt="">

<area shape="rect" coords="446,136 , 487,162" href="/cgi-bin/IMap.asp?map=5161D" alt="">

<area shape="rect" coords="486,137 , 525,163" href="/cgi-bin/IMap.asp?map=5261C" alt="">

<area shape="rect" coords="524,137 , 565,163" href="/cgi-bin/IMap.asp?map=5261D" alt="">

<area shape="rect" coords="564,137 , 604,162" href="/cgi-bin/IMap.asp?map=5361C" alt="">

<area shape="rect" coords="603,137 , 643,163" href="/cgi-bin/IMap.asp?map=5361D" alt="">

<area shape="rect" coords="642,137 , 682,163" href="/cgi-bin/IMap.asp?map=5461C" alt="">

<area shape="rect" coords="682,136 , 722,162" href="/cgi-bin/IMap.asp?map=5461D" alt="">

<area shape="rect" coords="721,137 , 761,163" href="/cgi-bin/IMap.asp?map=5561C" alt="">

<area shape="rect" coords="760,137 , 800,163" href="/cgi-bin/IMap.asp?map=5561D" alt="">

<area shape="rect" coords="368,163 , 408,188" href="/cgi-bin/IMap.asp?map=5060B" alt="">

<area shape="rect" coords="407,163 , 447,188" href="/cgi-bin/IMap.asp?map=5160A" alt="">

<area shape="rect" coords="446,162 , 487,189" href="/cgi-bin/IMap.asp?map=5160B" alt="">

<area shape="rect" coords="486,163 , 525,188" href="/cgi-bin/IMap.asp?map=5260A" alt="">

<area shape="rect" coords="525,163 , 565,189" href="/cgi-bin/IMap.asp?map=5260B" alt="">

<area shape="rect" coords="564,162 , 604,188" href="/cgi-bin/IMap.asp?map=5360A" alt="">

<area shape="rect" coords="603,163 , 644,189" href="/cgi-bin/IMap.asp?map=5360B" alt="">

<area shape="rect" coords="642,163 , 682,189" href="/cgi-bin/IMap.asp?map=5460A" alt="">

<area shape="rect" coords="682,163 , 722,189" href="/cgi-bin/IMap.asp?map=5460B" alt="">

<area shape="rect" coords="721,162 , 760,188" href="/cgi-bin/IMap.asp?map=5560A" alt="">

<area shape="rect" coords="760,163 , 800,189" href="/cgi-bin/IMap.asp?map=5560B" alt="">

<area shape="rect" coords="368,189 , 408,214" href="/cgi-bin/IMap.asp?map=5060D" alt="">

<area shape="rect" coords="407,189 , 447,214" href="/cgi-bin/IMap.asp?map=5160C" alt="">

<area shape="rect" coords="446,188 , 487,214" href="/cgi-bin/IMap.asp?map=5160D" alt="">

<area shape="rect" coords="486,189 , 525,214" href="/cgi-bin/IMap.asp?map=5260C" alt="">

<area shape="rect" coords="524,189 , 565,214" href="/cgi-bin/IMap.asp?map=5260D" alt="">

<area shape="rect" coords="564,189 , 604,214" href="/cgi-bin/IMap.asp?map=5360C" alt="">

<area shape="rect" coords="603,189 , 643,214" href="/cgi-bin/IMap.asp?map=5360D" alt="">

<area shape="rect" coords="642,189 , 682,214" href="/cgi-bin/IMap.asp?map=5460C" alt="">

<area shape="rect" coords="681,189 , 722,214" href="/cgi-bin/IMap.asp?map=5460D" alt="">

<area shape="rect" coords="721,189 , 761,214" href="/cgi-bin/IMap.asp?map=5560C" alt="">

<area shape="rect" coords="761,188 , 800,214" href="/cgi-bin/IMap.asp?map=5560D" alt="">

<area shape="rect" coords="838,189 , 879,214" href="/cgi-bin/IMap.asp?map=5660D" alt="">

<area shape="rect" coords="877,189 , 917,214" href="/cgi-bin/IMap.asp?map=5760C" alt="">

<area shape="rect" coords="408,214 , 447,240" href="/cgi-bin/IMap.asp?map=5159A" alt="">

<area shape="rect" coords="446,214 , 487,240" href="/cgi-bin/IMap.asp?map=5159B" alt="">

<area shape="rect" coords="486,214 , 525,240" href="/cgi-bin/IMap.asp?map=5259A" alt="">

<area shape="rect" coords="524,214 , 565,240" href="/cgi-bin/IMap.asp?map=5259B" alt="">

<area shape="rect" coords="565,214 , 604,240" href="/cgi-bin/IMap.asp?map=5359A" alt="">

<area shape="rect" coords="604,214 , 643,239" href="/cgi-bin/IMap.asp?map=5359B" alt="">

<area shape="rect" coords="643,213 , 682,240" href="/cgi-bin/IMap.asp?map=5459A" alt="">

<area shape="rect" coords="682,214 , 722,240" href="/cgi-bin/IMap.asp?map=5459B" alt="">

<area shape="rect" coords="722,214 , 761,240" href="/cgi-bin/IMap.asp?map=5559A" alt="">

<area shape="rect" coords="761,214 , 800,240" href="/cgi-bin/IMap.asp?map=5559B" alt="">

<area shape="rect" coords="800,214 , 839,240" href="/cgi-bin/IMap.asp?map=5659A" alt="">

<area shape="rect" coords="839,214 , 878,240" href="/cgi-bin/IMap.asp?map=5659B" alt="">

<area shape="rect" coords="878,214 , 917,240" href="/cgi-bin/IMap.asp?map=5759A" alt="">

<area shape="rect" coords="917,213 , 957,240" href="/cgi-bin/IMap.asp?map=5759B" alt="">

<area shape="rect" coords="447,240 , 487,266" href="/cgi-bin/IMap.asp?map=5159D" alt="">

<area shape="rect" coords="487,240 , 525,266" href="/cgi-bin/IMap.asp?map=5259C" alt="">

<area shape="rect" coords="525,239 , 565,266" href="/cgi-bin/IMap.asp?map=5259D" alt="">

<area shape="rect" coords="565,240 , 604,266" href="/cgi-bin/IMap.asp?map=5359C" alt="">

<area shape="rect" coords="604,239 , 643,266" href="/cgi-bin/IMap.asp?map=5359D" alt="">

<area shape="rect" coords="643,240 , 682,266" href="/cgi-bin/IMap.asp?map=5459C" alt="">

<area shape="rect" coords="682,240 , 722,266" href="/cgi-bin/IMap.asp?map=5459D" alt="">

<area shape="rect" coords="721,240 , 761,266" href="/cgi-bin/IMap.asp?map=5559C" alt="">

<area shape="rect" coords="760,240 , 800,265" href="/cgi-bin/IMap.asp?map=5559D" alt="">

<area shape="rect" coords="800,240 , 839,266" href="/cgi-bin/IMap.asp?map=5659C" alt="">

<area shape="rect" coords="840,240 , 879,266" href="/cgi-bin/IMap.asp?map=5659D" alt="">

<area shape="rect" coords="878,240 , 917,266" href="/cgi-bin/IMap.asp?map=5759C" alt="">

<area shape="rect" coords="917,240 , 957,266" href="/cgi-bin/IMap.asp?map=5759D" alt="">

<area shape="rect" coords="448,266 , 487,292" href="/cgi-bin/IMap.asp?map=5158B" alt="">

<area shape="rect" coords="487,266 , 525,292" href="/cgi-bin/IMap.asp?map=5258A" alt="">

<area shape="rect" coords="525,265 , 565,292" href="/cgi-bin/IMap.asp?map=5258B" alt="">

<area shape="rect" coords="565,266 , 604,292" href="/cgi-bin/IMap.asp?map=5358A" alt="">

<area shape="rect" coords="604,266 , 643,292" href="/cgi-bin/IMap.asp?map=5358B" alt="">

<area shape="rect" coords="643,266 , 682,292" href="/cgi-bin/IMap.asp?map=5458A" alt="">

<area shape="rect" coords="682,265 , 722,292" href="/cgi-bin/IMap.asp?map=5458B" alt="">

<area shape="rect" coords="722,266 , 761,292" href="/cgi-bin/IMap.asp?map=5558A" alt="">

<area shape="rect" coords="761,266 , 800,292" href="/cgi-bin/IMap.asp?map=5558B" alt="">

<area shape="rect" coords="800,266 , 839,292" href="/cgi-bin/IMap.asp?map=5658A" alt="">

<area shape="rect" coords="839,266 , 878,292" href="/cgi-bin/IMap.asp?map=5658B" alt="">

<area shape="rect" coords="877,266 , 917,292" href="/cgi-bin/IMap.asp?map=5758A" alt="">

<area shape="rect" coords="916,265 , 957,292" href="/cgi-bin/IMap.asp?map=5758B" alt="">

<area shape="rect" coords="957,266 , 996,292" href="/cgi-bin/IMap.asp?map=5858A" alt="">

<area shape="rect" coords="408,291 , 447,317" href="/cgi-bin/IMap.asp?map=5158C" alt="">

<area shape="rect" coords="447,292 , 487,317" href="/cgi-bin/IMap.asp?map=5158D" alt="">

<area shape="rect" coords="487,292 , 525,317" href="/cgi-bin/IMap.asp?map=5258C" alt="">

<area shape="rect" coords="525,292 , 565,317" href="/cgi-bin/IMap.asp?map=5258D" alt="">

<area shape="rect" coords="565,292 , 604,317" href="/cgi-bin/IMap.asp?map=5358C" alt="">

<area shape="rect" coords="604,292 , 643,317" href="/cgi-bin/IMap.asp?map=5358D" alt="">

<area shape="rect" coords="643,291 , 682,317" href="/cgi-bin/IMap.asp?map=5458C" alt="">

<area shape="rect" coords="682,292 , 722,317" href="/cgi-bin/IMap.asp?map=5458D" alt="">

<area shape="rect" coords="722,292 , 761,317" href="/cgi-bin/IMap.asp?map=5558C" alt="">

<area shape="rect" coords="761,292 , 800,317" href="/cgi-bin/IMap.asp?map=5558D" alt="">

<area shape="rect" coords="800,292 , 839,317" href="/cgi-bin/IMap.asp?map=5658C" alt="">

<area shape="rect" coords="839,292 , 878,317" href="/cgi-bin/IMap.asp?map=5658D" alt="">

<area shape="rect" coords="878,292 , 917,317" href="/cgi-bin/IMap.asp?map=5758C" alt="">

<area shape="rect" coords="917,292 , 957,317" href="/cgi-bin/IMap.asp?map=5758D" alt="">

<area shape="rect" coords="957,291 , 996,317" href="/cgi-bin/IMap.asp?map=5858C" alt="">

<area shape="rect" coords=" 94,317 , 133,343" href="/cgi-bin/IMap.asp?map=4757A" alt="">

<area shape="rect" coords="132,317 , 173,343" href="/cgi-bin/IMap.asp?map=4757B" alt="">

<area shape="rect" coords="211,317 , 252,343" href="/cgi-bin/IMap.asp?map=4857B" alt="">

<area shape="rect" coords="250,317 , 290,342" href="/cgi-bin/IMap.asp?map=4957A" alt="">

<area shape="rect" coords="369,316 , 408,343" href="/cgi-bin/IMap.asp?map=5057B" alt="">

<area shape="rect" coords="408,317 , 447,343" href="/cgi-bin/IMap.asp?map=5157A" alt="">

<area shape="rect" coords="447,317 , 487,343" href="/cgi-bin/IMap.asp?map=5157B" alt="">

<area shape="rect" coords="486,316 , 525,343" href="/cgi-bin/IMap.asp?map=5257A" alt="">

<area shape="rect" coords="525,317 , 566,343" href="/cgi-bin/IMap.asp?map=5257B" alt="">

<area shape="rect" coords="565,317 , 605,343" href="/cgi-bin/IMap.asp?map=5357A" alt="">

<area shape="rect" coords="605,317 , 644,343" href="/cgi-bin/IMap.asp?map=5357B" alt="">

<area shape="rect" coords="643,316 , 683,343" href="/cgi-bin/IMap.asp?map=5457A" alt="">

<area shape="rect" coords="683,317 , 723,343" href="/cgi-bin/IMap.asp?map=5457B" alt="">

<area shape="rect" coords="722,317 , 761,343" href="/cgi-bin/IMap.asp?map=5557A" alt="">

<area shape="rect" coords="761,317 , 801,343" href="/cgi-bin/IMap.asp?map=5557B" alt="">

<area shape="rect" coords="801,317 , 839,343" href="/cgi-bin/IMap.asp?map=5657A" alt="">

<area shape="rect" coords="839,316 , 879,343" href="/cgi-bin/IMap.asp?map=5657B" alt="">

<area shape="rect" coords="370,343 , 409,369" href="/cgi-bin/IMap.asp?map=5057D" alt="">

<area shape="rect" coords="408,343 , 448,369" href="/cgi-bin/IMap.asp?map=5157C" alt="">

<area shape="rect" coords="448,343 , 488,369" href="/cgi-bin/IMap.asp?map=5157D" alt="">

<area shape="rect" coords="488,343 , 526,369" href="/cgi-bin/IMap.asp?map=5257C" alt="">

<area shape="rect" coords="526,343 , 566,369" href="/cgi-bin/IMap.asp?map=5257D" alt="">

<area shape="rect" coords="566,343 , 605,369" href="/cgi-bin/IMap.asp?map=5357C" alt="">

<area shape="rect" coords="605,342 , 644,368" href="/cgi-bin/IMap.asp?map=5357D" alt="">

<area shape="rect" coords="644,343 , 683,369" href="/cgi-bin/IMap.asp?map=5457C" alt="">

<area shape="rect" coords="683,343 , 723,369" href="/cgi-bin/IMap.asp?map=5457D" alt="">

<area shape="rect" coords="723,343 , 761,369" href="/cgi-bin/IMap.asp?map=5557C" alt="">

<area shape="rect" coords="761,343 , 801,369" href="/cgi-bin/IMap.asp?map=5557D" alt="">

<area shape="rect" coords="801,343 , 840,369" href="/cgi-bin/IMap.asp?map=5657C" alt="">

<area shape="rect" coords="840,342 , 879,369" href="/cgi-bin/IMap.asp?map=5657D" alt="">

<area shape="rect" coords=" 94,343 , 134,369" href="/cgi-bin/IMap.asp?map=4757C" alt="">

<area shape="rect" coords="134,342 , 174,369" href="/cgi-bin/IMap.asp?map=4757D" alt="">

<area shape="rect" coords="173,343 , 213,369" href="/cgi-bin/IMap.asp?map=4857C" alt="">

<area shape="rect" coords="211,343 , 251,368" href="/cgi-bin/IMap.asp?map=4857D" alt="">

<area shape="rect" coords="251,343 , 291,369" href="/cgi-bin/IMap.asp?map=4957C" alt="">

<area shape="rect" coords="291,342 , 330,369" href="/cgi-bin/IMap.asp?map=4957D" alt="">

<area shape="rect" coords=" 94,368 , 134,394" href="/cgi-bin/IMap.asp?map=4756A" alt="">

<area shape="rect" coords="134,369 , 173,394" href="/cgi-bin/IMap.asp?map=4756B" alt="">

<area shape="rect" coords="172,368 , 212,394" href="/cgi-bin/IMap.asp?map=4856A" alt="">

<area shape="rect" coords="212,369 , 252,394" href="/cgi-bin/IMap.asp?map=4856B" alt="">

<area shape="rect" coords="251,368 , 291,394" href="/cgi-bin/IMap.asp?map=4956A" alt="">

<area shape="rect" coords="290,368 , 330,394" href="/cgi-bin/IMap.asp?map=4956B" alt="">

<area shape="rect" coords="329,369 , 369,394" href="/cgi-bin/IMap.asp?map=5056A" alt="">

<area shape="rect" coords="370,368 , 409,394" href="/cgi-bin/IMap.asp?map=5056B" alt="">

<area shape="rect" coords="409,369 , 448,394" href="/cgi-bin/IMap.asp?map=5156A" alt="">

<area shape="rect" coords="447,369 , 488,394" href="/cgi-bin/IMap.asp?map=5156B" alt="">

<area shape="rect" coords="487,369 , 526,394" href="/cgi-bin/IMap.asp?map=5256A" alt="">

<area shape="rect" coords="526,369 , 566,394" href="/cgi-bin/IMap.asp?map=5256B" alt="">

<area shape="rect" coords="566,369 , 605,394" href="/cgi-bin/IMap.asp?map=5356A" alt="">

<area shape="rect" coords="604,368 , 643,393" href="/cgi-bin/IMap.asp?map=5356B" alt="">

<area shape="rect" coords="644,369 , 683,394" href="/cgi-bin/IMap.asp?map=5456A" alt="">

<area shape="rect" coords="683,369 , 723,394" href="/cgi-bin/IMap.asp?map=5456B" alt="">

<area shape="rect" coords="723,369 , 762,394" href="/cgi-bin/IMap.asp?map=5556A" alt="">

<area shape="rect" coords="761,369 , 801,394" href="/cgi-bin/IMap.asp?map=5556B" alt="">

<area shape="rect" coords="801,369 , 840,394" href="/cgi-bin/IMap.asp?map=5656A" alt="">

<area shape="rect" coords="840,369 , 879,394" href="/cgi-bin/IMap.asp?map=5656B" alt="">

<area shape="rect" coords=" 95,394 , 134,419" href="/cgi-bin/IMap.asp?map=4756C" alt="">

<area shape="rect" coords="134,393 , 173,419" href="/cgi-bin/IMap.asp?map=4756D" alt="">

<area shape="rect" coords="173,393 , 213,419" href="/cgi-bin/IMap.asp?map=4856C" alt="">

<area shape="rect" coords="212,393 , 252,419" href="/cgi-bin/IMap.asp?map=4856D" alt="">

<area shape="rect" coords="251,394 , 290,419" href="/cgi-bin/IMap.asp?map=4956C" alt="">

<area shape="rect" coords="289,393 , 329,419" href="/cgi-bin/IMap.asp?map=4956D" alt="">

<area shape="rect" coords="330,393 , 370,419" href="/cgi-bin/IMap.asp?map=5056C" alt="">

<area shape="rect" coords="369,393 , 408,420" href="/cgi-bin/IMap.asp?map=5056D" alt="">

<area shape="rect" coords="409,394 , 447,420" href="/cgi-bin/IMap.asp?map=5156C" alt="">

<area shape="rect" coords="448,395 , 487,420" href="/cgi-bin/IMap.asp?map=5156D" alt="">

<area shape="rect" coords="487,394 , 525,420" href="/cgi-bin/IMap.asp?map=5256C" alt="">

<area shape="rect" coords="525,394 , 565,420" href="/cgi-bin/IMap.asp?map=5256D" alt="">

<area shape="rect" coords="565,394 , 604,419" href="/cgi-bin/IMap.asp?map=5356C" alt="">

<area shape="rect" coords="605,394 , 644,420" href="/cgi-bin/IMap.asp?map=5356D" alt="">

<area shape="rect" coords="643,394 , 682,420" href="/cgi-bin/IMap.asp?map=5456C" alt="">

<area shape="rect" coords="682,394 , 723,420" href="/cgi-bin/IMap.asp?map=5456D" alt="">

<area shape="rect" coords="722,394 , 761,420" href="/cgi-bin/IMap.asp?map=5556C" alt="">

<area shape="rect" coords="762,394 , 801,420" href="/cgi-bin/IMap.asp?map=5556D" alt="">

<area shape="rect" coords="801,393 , 839,420" href="/cgi-bin/IMap.asp?map=5656C" alt="">

<area shape="rect" coords="839,394 , 879,420" href="/cgi-bin/IMap.asp?map=5656D" alt="">

<area shape="rect" coords="878,393 , 918,419" href="/cgi-bin/IMap.asp?map=5756C" alt="">

<area shape="rect" coords="291,419 , 330,446" href="/cgi-bin/IMap.asp?map=4955B" alt="">

<area shape="rect" coords="329,419 , 369,445" href="/cgi-bin/IMap.asp?map=5055A" alt="">

<area shape="rect" coords="370,420 , 408,445" href="/cgi-bin/IMap.asp?map=5055B" alt="">

<area shape="rect" coords="408,420 , 447,446" href="/cgi-bin/IMap.asp?map=5155A" alt="">

<area shape="rect" coords="447,419 , 487,446" href="/cgi-bin/IMap.asp?map=5155B" alt="">

<area shape="rect" coords="487,420 , 526,446" href="/cgi-bin/IMap.asp?map=5255A" alt="">

<area shape="rect" coords="526,419 , 566,445" href="/cgi-bin/IMap.asp?map=5255B" alt="">

<area shape="rect" coords="565,420 , 605,446" href="/cgi-bin/IMap.asp?map=5355A" alt="">

<area shape="rect" coords="604,419 , 643,446" href="/cgi-bin/IMap.asp?map=5355B" alt="">

<area shape="rect" coords="644,420 , 683,446" href="/cgi-bin/IMap.asp?map=5455A" alt="">

<area shape="rect" coords="682,420 , 723,446" href="/cgi-bin/IMap.asp?map=5455B" alt="">

<area shape="rect" coords="723,419 , 762,445" href="/cgi-bin/IMap.asp?map=5555A" alt="">

<area shape="rect" coords="761,420 , 801,446" href="/cgi-bin/IMap.asp?map=5555B" alt="">

<area shape="rect" coords="801,420 , 839,446" href="/cgi-bin/IMap.asp?map=5655A" alt="">

<area shape="rect" coords="839,420 , 879,446" href="/cgi-bin/IMap.asp?map=5655B" alt="">

<area shape="rect" coords="879,420 , 918,446" href="/cgi-bin/IMap.asp?map=5755A" alt="">

<area shape="rect" coords="290,446 , 329,472" href="/cgi-bin/IMap.asp?map=4955D" alt="">

<area shape="rect" coords="329,445 , 370,472" href="/cgi-bin/IMap.asp?map=5055C" alt="">

<area shape="rect" coords="369,445 , 409,472" href="/cgi-bin/IMap.asp?map=5055D" alt="">

<area shape="rect" coords="408,445 , 448,472" href="/cgi-bin/IMap.asp?map=5155C" alt="">

<area shape="rect" coords="447,446 , 488,472" href="/cgi-bin/IMap.asp?map=5155D" alt="">

<area shape="rect" coords="487,446 , 526,472" href="/cgi-bin/IMap.asp?map=5255C" alt="">

<area shape="rect" coords="525,445 , 566,472" href="/cgi-bin/IMap.asp?map=5255D" alt="">

<area shape="rect" coords="565,446 , 605,472" href="/cgi-bin/IMap.asp?map=5355C" alt="">

<area shape="rect" coords="605,446 , 644,472" href="/cgi-bin/IMap.asp?map=5355D" alt="">

<area shape="rect" coords="643,445 , 683,471" href="/cgi-bin/IMap.asp?map=5455C" alt="">

<area shape="rect" coords="683,446 , 723,472" href="/cgi-bin/IMap.asp?map=5455D" alt="">

<area shape="rect" coords="723,445 , 762,472" href="/cgi-bin/IMap.asp?map=5555C" alt="">

<area shape="rect" coords="762,446 , 801,472" href="/cgi-bin/IMap.asp?map=5555D" alt="">

<area shape="rect" coords="800,446 , 840,472" href="/cgi-bin/IMap.asp?map=5655C" alt="">

<area shape="rect" coords="840,446 , 878,472" href="/cgi-bin/IMap.asp?map=5655D" alt="">

<area shape="rect" coords="878,446 , 917,472" href="/cgi-bin/IMap.asp?map=5755C" alt="">

<area shape="rect" coords="291,472 , 330,497" href="/cgi-bin/IMap.asp?map=4954B" alt="">

<area shape="rect" coords="329,472 , 370,497" href="/cgi-bin/IMap.asp?map=5054A" alt="">

<area shape="rect" coords="369,472 , 408,497" href="/cgi-bin/IMap.asp?map=5054B" alt="">

<area shape="rect" coords="409,472 , 448,497" href="/cgi-bin/IMap.asp?map=5154A" alt="">

<area shape="rect" coords="448,472 , 488,497" href="/cgi-bin/IMap.asp?map=5154B" alt="">

<area shape="rect" coords="487,472 , 525,497" href="/cgi-bin/IMap.asp?map=5254A" alt="">

<area shape="rect" coords="525,472 , 566,497" href="/cgi-bin/IMap.asp?map=5254B" alt="">

<area shape="rect" coords="566,472 , 605,497" href="/cgi-bin/IMap.asp?map=5354A" alt="">

<area shape="rect" coords="605,472 , 644,497" href="/cgi-bin/IMap.asp?map=5354B" alt="">

<area shape="rect" coords="644,472 , 683,497" href="/cgi-bin/IMap.asp?map=5454A" alt="">

<area shape="rect" coords="682,472 , 723,497" href="/cgi-bin/IMap.asp?map=5454B" alt="">

<area shape="rect" coords="722,471 , 762,497" href="/cgi-bin/IMap.asp?map=5554A" alt="">

<area shape="rect" coords="761,472 , 801,497" href="/cgi-bin/IMap.asp?map=5554B" alt="">

<area shape="rect" coords="800,471 , 840,497" href="/cgi-bin/IMap.asp?map=5654A" alt="">

<area shape="rect" coords="840,472 , 879,497" href="/cgi-bin/IMap.asp?map=5654B" alt="">

<area shape="rect" coords="879,472 , 917,497" href="/cgi-bin/IMap.asp?map=5754A" alt="">

<area shape="rect" coords="290,497 , 330,523" href="/cgi-bin/IMap.asp?map=4954D" alt="">

<area shape="rect" coords="329,496 , 370,523" href="/cgi-bin/IMap.asp?map=5054C" alt="">

<area shape="rect" coords="369,497 , 409,523" href="/cgi-bin/IMap.asp?map=5054D" alt="">

<area shape="rect" coords="409,496 , 448,523" href="/cgi-bin/IMap.asp?map=5154C" alt="">

<area shape="rect" coords="448,497 , 488,523" href="/cgi-bin/IMap.asp?map=5154D" alt="">

<area shape="rect" coords="488,498 , 525,523" href="/cgi-bin/IMap.asp?map=5254C" alt="">

<area shape="rect" coords="525,497 , 565,522" href="/cgi-bin/IMap.asp?map=5254D" alt="">

<area shape="rect" coords="565,496 , 604,523" href="/cgi-bin/IMap.asp?map=5354C" alt="">

<area shape="rect" coords="604,497 , 644,523" href="/cgi-bin/IMap.asp?map=5354D" alt="">

<area shape="rect" coords="644,497 , 682,522" href="/cgi-bin/IMap.asp?map=5454C" alt="">

<area shape="rect" coords="682,497 , 723,522" href="/cgi-bin/IMap.asp?map=5454D" alt="">

<area shape="rect" coords="723,497 , 762,523" href="/cgi-bin/IMap.asp?map=5554C" alt="">

<area shape="rect" coords="762,497 , 800,523" href="/cgi-bin/IMap.asp?map=5554D" alt="">

<area shape="rect" coords="801,497 , 840,523" href="/cgi-bin/IMap.asp?map=5654C" alt="">

<area shape="rect" coords="839,497 , 879,523" href="/cgi-bin/IMap.asp?map=5654D" alt="">

<area shape="rect" coords="878,497 , 918,523" href="/cgi-bin/IMap.asp?map=5754C" alt="">

<area shape="rect" coords="290,523 , 330,549" href="/cgi-bin/IMap.asp?map=4953B" alt="">

<area shape="rect" coords="329,523 , 370,549" href="/cgi-bin/IMap.asp?map=5053A" alt="">

<area shape="rect" coords="370,523 , 409,549" href="/cgi-bin/IMap.asp?map=5053B" alt="">

<area shape="rect" coords="409,523 , 447,549" href="/cgi-bin/IMap.asp?map=5153A" alt="">

<area shape="rect" coords="447,522 , 488,549" href="/cgi-bin/IMap.asp?map=5153B" alt="">

<area shape="rect" coords="488,523 , 526,549" href="/cgi-bin/IMap.asp?map=5253A" alt="">

<area shape="rect" coords="526,523 , 566,549" href="/cgi-bin/IMap.asp?map=5253B" alt="">

<area shape="rect" coords="565,522 , 605,549" href="/cgi-bin/IMap.asp?map=5353A" alt="">

<area shape="rect" coords="605,523 , 644,549" href="/cgi-bin/IMap.asp?map=5353B" alt="">

<area shape="rect" coords="643,522 , 683,549" href="/cgi-bin/IMap.asp?map=5453A" alt="">

<area shape="rect" coords="682,522 , 723,549" href="/cgi-bin/IMap.asp?map=5453B" alt="">

<area shape="rect" coords="723,522 , 761,549" href="/cgi-bin/IMap.asp?map=5553A" alt="">

<area shape="rect" coords="761,523 , 800,549" href="/cgi-bin/IMap.asp?map=5553B" alt="">

<area shape="rect" coords="800,523 , 839,549" href="/cgi-bin/IMap.asp?map=5653A" alt="">

<area shape="rect" coords="839,523 , 878,549" href="/cgi-bin/IMap.asp?map=5653B" alt="">

<area shape="rect" coords="878,522 , 918,549" href="/cgi-bin/IMap.asp?map=5753A" alt="">

<area shape="rect" coords="251,548 , 291,575" href="/cgi-bin/IMap.asp?map=4953C" alt="">

<area shape="rect" coords="290,547 , 330,574" href="/cgi-bin/IMap.asp?map=4953D" alt="">

<area shape="rect" coords="329,548 , 369,574" href="/cgi-bin/IMap.asp?map=5053C" alt="">

<area shape="rect" coords="369,548 , 408,575" href="/cgi-bin/IMap.asp?map=5053D" alt="">

<area shape="rect" coords="409,548 , 448,575" href="/cgi-bin/IMap.asp?map=5153C" alt="">

<area shape="rect" coords="448,547 , 488,574" href="/cgi-bin/IMap.asp?map=5153D" alt="">

<area shape="rect" coords="488,547 , 526,575" href="/cgi-bin/IMap.asp?map=5253C" alt="">

<area shape="rect" coords="526,548 , 566,575" href="/cgi-bin/IMap.asp?map=5253D" alt="">

<area shape="rect" coords="566,547 , 605,575" href="/cgi-bin/IMap.asp?map=5353C" alt="">

<area shape="rect" coords="605,547 , 644,575" href="/cgi-bin/IMap.asp?map=5353D" alt="">

<area shape="rect" coords="644,547 , 682,575" href="/cgi-bin/IMap.asp?map=5453C" alt="">

<area shape="rect" coords="682,547 , 722,575" href="/cgi-bin/IMap.asp?map=5453D" alt="">

<area shape="rect" coords="723,547 , 762,575" href="/cgi-bin/IMap.asp?map=5553C" alt="">

<area shape="rect" coords="762,548 , 801,574" href="/cgi-bin/IMap.asp?map=5553D" alt="">

<area shape="rect" coords="801,547 , 840,575" href="/cgi-bin/IMap.asp?map=5653C" alt="">

<area shape="rect" coords="840,547 , 879,575" href="/cgi-bin/IMap.asp?map=5653D" alt="">

<area shape="rect" coords="252,574 , 291,599" href="/cgi-bin/IMap.asp?map=4952A" alt="">

<area shape="rect" coords="291,573 , 330,600" href="/cgi-bin/IMap.asp?map=4952B" alt="">

<area shape="rect" coords="330,574 , 370,600" href="/cgi-bin/IMap.asp?map=5052A" alt="">

<area shape="rect" coords="369,573 , 409,600" href="/cgi-bin/IMap.asp?map=5052B" alt="">

<area shape="rect" coords="409,574 , 448,600" href="/cgi-bin/IMap.asp?map=5152A" alt="">

<area shape="rect" coords="449,574 , 488,599" href="/cgi-bin/IMap.asp?map=5152B" alt="">

<area shape="rect" coords="488,573 , 526,600" href="/cgi-bin/IMap.asp?map=5252A" alt="">

<area shape="rect" coords="526,574 , 566,600" href="/cgi-bin/IMap.asp?map=5252B" alt="">

<area shape="rect" coords="566,574 , 605,600" href="/cgi-bin/IMap.asp?map=5352A" alt="">

<area shape="rect" coords="604,573 , 643,599" href="/cgi-bin/IMap.asp?map=5352B" alt="">

<area shape="rect" coords="643,574 , 683,599" href="/cgi-bin/IMap.asp?map=5452A" alt="">

<area shape="rect" coords="682,574 , 723,599" href="/cgi-bin/IMap.asp?map=5452B" alt="">

<area shape="rect" coords="723,574 , 762,600" href="/cgi-bin/IMap.asp?map=5552A" alt="">

<area shape="rect" coords="762,573 , 801,600" href="/cgi-bin/IMap.asp?map=5552B" alt="">

<area shape="rect" coords="800,574 , 839,599" href="/cgi-bin/IMap.asp?map=5652A" alt="">

<area shape="rect" coords="839,573 , 878,599" href="/cgi-bin/IMap.asp?map=5652B" alt="">

<area shape="rect" coords="252,599 , 291,625" href="/cgi-bin/IMap.asp?map=4952C" alt="">

<area shape="rect" coords="290,599 , 329,625" href="/cgi-bin/IMap.asp?map=4952D" alt="">

<area shape="rect" coords="330,599 , 370,625" href="/cgi-bin/IMap.asp?map=5052C" alt="">

<area shape="rect" coords="369,599 , 408,626" href="/cgi-bin/IMap.asp?map=5052D" alt="">

<area shape="rect" coords="409,599 , 448,625" href="/cgi-bin/IMap.asp?map=5152C" alt="">

<area shape="rect" coords="447,598 , 488,626" href="/cgi-bin/IMap.asp?map=5152D" alt="">

<area shape="rect" coords="488,598 , 526,626" href="/cgi-bin/IMap.asp?map=5252C" alt="">

<area shape="rect" coords="526,598 , 566,626" href="/cgi-bin/IMap.asp?map=5252D" alt="">

<area shape="rect" coords="566,598 , 605,626" href="/cgi-bin/IMap.asp?map=5352C" alt="">

<area shape="rect" coords="604,598 , 644,626" href="/cgi-bin/IMap.asp?map=5352D" alt="">

<area shape="rect" coords="644,598 , 683,626" href="/cgi-bin/IMap.asp?map=5452C" alt="">

<area shape="rect" coords="682,598 , 723,625" href="/cgi-bin/IMap.asp?map=5452D" alt="">

<area shape="rect" coords="723,599 , 762,625" href="/cgi-bin/IMap.asp?map=5552C" alt="">

<area shape="rect" coords="761,599 , 800,625" href="/cgi-bin/IMap.asp?map=5552D" alt="">

<area shape="rect" coords="801,599 , 839,625" href="/cgi-bin/IMap.asp?map=5652C" alt="">

<area shape="rect" coords="839,598 , 878,626" href="/cgi-bin/IMap.asp?map=5652D" alt="">

<area shape="rect" coords="330,624 , 370,652" href="/cgi-bin/IMap.asp?map=5051A" alt="">

<area shape="rect" coords="369,624 , 409,651" href="/cgi-bin/IMap.asp?map=5051B" alt="">

<area shape="rect" coords="409,625 , 448,652" href="/cgi-bin/IMap.asp?map=5151A" alt="">

<area shape="rect" coords="448,625 , 488,652" href="/cgi-bin/IMap.asp?map=5151B" alt="">

<area shape="rect" coords="487,625 , 526,652" href="/cgi-bin/IMap.asp?map=5251A" alt="">

<area shape="rect" coords="526,624 , 565,652" href="/cgi-bin/IMap.asp?map=5251B" alt="">

<area shape="rect" coords="565,625 , 605,652" href="/cgi-bin/IMap.asp?map=5351A" alt="">

<area shape="rect" coords="605,625 , 643,651" href="/cgi-bin/IMap.asp?map=5351B" alt="">

<area shape="rect" coords="643,625 , 682,650" href="/cgi-bin/IMap.asp?map=5451A" alt="">

<area shape="rect" coords="683,625 , 723,652" href="/cgi-bin/IMap.asp?map=5451B" alt="">

<area shape="rect" coords="723,624 , 761,652" href="/cgi-bin/IMap.asp?map=5551A" alt="">

<area shape="rect" coords="762,624 , 801,652" href="/cgi-bin/IMap.asp?map=5551B" alt="">

<area shape="rect" coords="800,624 , 840,651" href="/cgi-bin/IMap.asp?map=5651A" alt="">

<area shape="rect" coords="839,625 , 878,652" href="/cgi-bin/IMap.asp?map=5651B" alt="">

<area shape="rect" coords="369,650 , 409,677" href="/cgi-bin/IMap.asp?map=5051D" alt="">

<area shape="rect" coords="409,650 , 448,678" href="/cgi-bin/IMap.asp?map=5151C" alt="">

<area shape="rect" coords="448,651 , 488,678" href="/cgi-bin/IMap.asp?map=5151D" alt="">

<area shape="rect" coords="488,651 , 525,677" href="/cgi-bin/IMap.asp?map=5251C" alt="">

<area shape="rect" coords="526,651 , 566,678" href="/cgi-bin/IMap.asp?map=5251D" alt="">

<area shape="rect" coords="566,651 , 605,677" href="/cgi-bin/IMap.asp?map=5351C" alt="">

<area shape="rect" coords="605,650 , 644,678" href="/cgi-bin/IMap.asp?map=5351D" alt="">

<area shape="rect" coords="644,650 , 683,677" href="/cgi-bin/IMap.asp?map=5451C" alt="">

<area shape="rect" coords="682,652 , 722,677" href="/cgi-bin/IMap.asp?map=5451D" alt="">

<area shape="rect" coords="722,651 , 761,677" href="/cgi-bin/IMap.asp?map=5551C" alt="">

<area shape="rect" coords="762,651 , 801,678" href="/cgi-bin/IMap.asp?map=5551D" alt="">

<area shape="rect" coords="800,651 , 840,678" href="/cgi-bin/IMap.asp?map=5651C" alt="">

<area shape="rect" coords="840,651 , 878,678" href="/cgi-bin/IMap.asp?map=5651D" alt="">

<area shape="rect" coords="408,677 , 448,703" href="/cgi-bin/IMap.asp?map=5150A" alt="">

<area shape="rect" coords="447,677 , 488,703" href="/cgi-bin/IMap.asp?map=5150B" alt="">

<area shape="rect" coords="488,676 , 526,703" href="/cgi-bin/IMap.asp?map=5250A" alt="">

<area shape="rect" coords="525,676 , 566,703" href="/cgi-bin/IMap.asp?map=5250B" alt="">

<area shape="rect" coords="566,677 , 604,703" href="/cgi-bin/IMap.asp?map=5350A" alt="">

<area shape="rect" coords="605,677 , 644,703" href="/cgi-bin/IMap.asp?map=5350D" alt="">

<area shape="rect" coords="643,676 , 682,702" href="/cgi-bin/IMap.asp?map=5450A" alt="">

<area shape="rect" coords="683,676 , 723,703" href="/cgi-bin/IMap.asp?map=5450B" alt="">

<area shape="rect" coords="723,676 , 762,703" href="/cgi-bin/IMap.asp?map=5550A" alt="">

<area shape="rect" coords="762,676 , 801,703" href="/cgi-bin/IMap.asp?map=5550B" alt="">

<area shape="rect" coords="801,676 , 840,703" href="/cgi-bin/IMap.asp?map=5650A" alt="">

<area shape="rect" coords="840,677 , 879,703" href="/cgi-bin/IMap.asp?map=5650B" alt="">

<area shape="rect" coords="487,702 , 525,728" href="/cgi-bin/IMap.asp?map=5250C" alt="">

<area shape="rect" coords="525,702 , 565,728" href="/cgi-bin/IMap.asp?map=5250D" alt="">

<area shape="rect" coords="565,702 , 604,729" href="/cgi-bin/IMap.asp?map=5350C" alt="">

<area shape="rect" coords="603,701 , 643,729" href="/cgi-bin/IMap.asp?map=5350D" alt="">

<area shape="rect" coords="643,702 , 682,729" href="/cgi-bin/IMap.asp?map=5450C" alt="">

<area shape="rect" coords="682,701 , 722,729" href="/cgi-bin/IMap.asp?map=5450D" alt="">

<area shape="rect" coords="525,728 , 565,755" href="/cgi-bin/IMap.asp?map=5249B" alt="">

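</map> '''

import pandas as pd

from bs4 import BeautifulSoup

soup = BeautifulSoup(iMap, 'html.parser')  # parse the pasted map snippet

facet_maps = soup.find_all("area")  # one area tag per facet

facet_numbers = [f['href'].split('=')[-1] for f in facet_maps]  # "/cgi-bin/IMap.asp?map=5156A" -> "5156A"

facet_number = [fn for fn in facet_numbers for _ in range(12)]  # repeat each facet number 12 times, once per detailed map

detailed_map = ['https://public.hcad.org/iMaps/Tiles/Color/' + fn + str(a) + '.pdf' for fn in facet_numbers for a in range(1, 13)]  # 12 PDF links per facet

appr_dist = 'Houston'

appr_dist_link = 'https://public.hcad.org/maps/Houston.asp'

df = pd.DataFrame({'Facet Number': facet_number, 'Detailed map': detailed_map})  # both lists have 12 entries per facet, so their lengths match

df['Appraisal Districts'] = appr_dist  # a scalar value is repeated on every row

df['Link'] = appr_dist_link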
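The script above handles a single appraisal district, and two things are worth adding before building the full spreadsheet. First, the pasted Houston `<map>` contains repeated facet numbers: `5261B` and `5350D` each appear twice (possibly typos for `5361B` and `5350B`, judging by their position in the grid, but that is only a guess), so repeats should be flagged and dropped. Second, the other 21 districts need the same treatment. The sketch below assumes the facet numbers have already been extracted per district (for example with the BeautifulSoup code above) and writes a CSV file, which any spreadsheet program can open, using only the standard library. The names `build_rows`, `write_spreadsheet`, and `hcad_detailed_maps.csv` are hypothetical.

```python
import csv
from collections import Counter

PDF_BASE = "https://public.hcad.org/iMaps/Tiles/Color/"
COLUMNS = ["Appraisal Districts", "Link", "Facet Number", "Detailed map"]


def build_rows(district, district_link, facets, tiles=12):
    """One row per detailed PDF map; a repeated facet number is kept only once."""
    repeated = sorted(f for f, count in Counter(facets).items() if count > 1)
    if repeated:
        print(f"{district}: repeated facet numbers {repeated}")
    rows = []
    for facet in dict.fromkeys(facets):  # drops repeats but keeps the original order
        for n in range(1, tiles + 1):
            rows.append({
                "Appraisal Districts": district,
                "Link": district_link,
                "Facet Number": facet,
                "Detailed map": f"{PDF_BASE}{facet}{n}.pdf",
            })
    return rows


def write_spreadsheet(rows, path):
    """Write the rows to a CSV file that Excel or LibreOffice can open."""
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=COLUMNS)
        writer.writeheader()
        writer.writerows(rows)


# Facet lists per district; only four Houston facets (row 3 of the map) are shown.
districts = {
    "Houston": ("https://public.hcad.org/maps/Houston.asp",
                ["5261A", "5261B", "5361A", "5261B"]),
}

all_rows = []
for name, (link, facets) in districts.items():
    all_rows.extend(build_rows(name, link, facets))

write_spreadsheet(all_rows, "hcad_detailed_maps.csv")
print(len(all_rows))  # 36: three distinct facets x 12 maps
```

Adding a district is then just one more entry in `districts`; the duplicate warning makes it easy to spot copy-and-paste slips in any of the 22 pasted snippets.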