This is a short Python Web Scraping Tutorial

We will learn how to scrape data from the StaticGen website and save it to a CSV file for further processing.

Let's get our hands dirty...

Prerequisites

You should already have at least some basic knowledge of the following:

1- HTML and CSS

2- Python

Inspecting the site's HTML structure

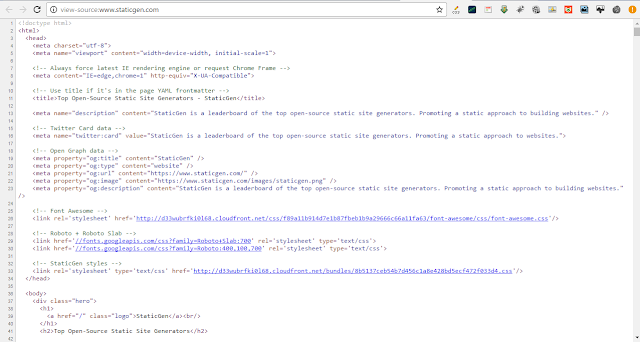

Load the website in your browser and study its HTML structure. Use whatever tool or browser you like for this; I used Google Chrome, and it looks like the screenshot shown here. Any of the following will show you the HTML:

Ctrl+U to view the source code

Firebug to view the HTML code

HTML Inspector (Ctrl+Shift+I)

As you may have noticed, the data we want to scrape is arranged in rows and columns on the web page. This makes things easier since we have a consistent pattern to follow.
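To see why a repeating layout helps, here is a minimal sketch that uses only Python's built-in html.parser module, not the libraries this tutorial goes on to use. The sample markup, its class names, and the TitleCollector class are made up for illustration; they are not StaticGen's actual HTML.

```python
from html.parser import HTMLParser

# Made-up sample markup: one repeated <li> block per project.
SAMPLE_HTML = """
<ul>
  <li class="project"><h4 class="title">Alpha</h4><h6 class="url">alpha.example</h6></li>
  <li class="project"><h4 class="title">Beta</h4><h6 class="url">beta.example</h6></li>
</ul>
"""


class TitleCollector(HTMLParser):
    """Collect the text of every <h4 class="title"> element."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "h4" and ("class", "title") in attrs:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "h4":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.titles.append(data.strip())


collector = TitleCollector()
collector.feed(SAMPLE_HTML)
print(collector.titles)
```

Because every project uses the same tags and classes, one rule is enough to pick up all of them, however many rows the page has.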

Now, there are many Python libraries you can use to scrape and clean data from such a website, including Requests, BeautifulSoup, Selenium, and Pandas.

Each library takes a slightly different approach to extracting data from a web page. For example, to use Selenium you have to download and configure the web driver for the browser you are using.

In most cases, you will combine several libraries to complete a task. In this tutorial, I am going to use Selenium together with Pandas to complete the scraping task.

Web Scraping with Python Selenium and Pandas

You can install Selenium and Pandas in your Python environment by running: pip install <packageName>. More details can be found on their respective official websites:

Selenium = http://www.seleniumhq.org

Pandas = http://pandas.pydata.org
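If you are not sure whether the installs worked, a quick check like the one below can help. It is only a sketch: installed_version is a helper name I made up, and it relies on the standard library's importlib.metadata (Python 3.8 or newer).

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


for name in ("selenium", "pandas"):
    version = installed_version(name)
    print(f"{name}: {version or 'not installed'}")
```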

Now, I will assume you have installed and configured the above modules. So let's start scraping...

Looking closely, you will see that the XPath changes incrementally. Following the pattern, I created a text file listing all the XPaths while skipping the odd (4th) XPath. This text file is then read line by line, and each XPath is passed to Selenium's find_element_by_xpath() method (written as find_element(By.XPATH, ...) in Selenium 4).

This may not be the most effective approach, but it gets the job done.
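Instead of typing the text file by hand, the incremental pattern described above could be generated. The sketch below assumes that only one number in the XPath changes and that "skipping the 4th" means leaving out position 4. The TEMPLATE string and both function names are hypothetical; copy a real XPath from your browser's inspector and put {n} where the number changes.

```python
from pathlib import Path


def build_xpaths(template, count, skip=(4,)):
    """Return XPaths for positions 1..count, leaving out the positions in skip."""
    return [template.format(n=n) for n in range(1, count + 1) if n not in skip]


def save_xpaths(xpaths, path):
    """Write one XPath per line."""
    Path(path).write_text("\n".join(xpaths) + "\n", encoding="utf-8")


# Hypothetical template, not taken from the real page.
TEMPLATE = "//main/ul/li[{n}]/a/dl/dd"

xpaths = build_xpaths(TEMPLATE, count=6)
for xpath in xpaths:
    print(xpath)

# save_xpaths(xpaths, "xpaths.txt")  # uncomment to write the file
```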

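Here is the code:

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By
    import pandas as pd

    # Create a driver instance. Selenium 4 takes the chromedriver path through a
    # Service object; Selenium 3 accepted it as the first positional argument.
    driver = webdriver.Chrome(service=Service('C:\\Users\\user\\Documents\\Jupyter_Notebooks\\chromedriver_win32\\chromedriver.exe'))

    url = 'https://www.staticgen.com/'

    # One XPath per line, no header row
    xp = pd.read_csv('xpaths.txt', header=None)

    # Load the page
    driver.get(url)

    # ====================================
    # Find the element behind each XPath
    # (Selenium 3 spelled this driver.find_element_by_xpath(x))
    ele_list = []
    for x in xp[0]:
        ele = driver.find_element(By.XPATH, x)
        ele_list.append(ele)

    # ====================================
    # Pull the visible text out of each element
    lang_text_list = []
    for lang in ele_list:
        lang_text = lang.text
        lang_text_list.append(lang_text)

    lang_text_df = pd.DataFrame(lang_text_list)
    lang_text_df.to_csv("siteGen.csv")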
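One thing to be aware of: by default, to_csv() also writes the row index as an extra first column, and an unnamed column gets 0 as its header. If you would rather have a clean single-column file, something like the sketch below should do it. The column name language and the sample values are my own stand-ins, not data from the site.

```python
import pandas as pd

# Stand-in values; in the script above these come from the scraped elements.
lang_text_list = ["Go", "Ruby", "JavaScript"]

# Name the column up front instead of leaving it as 0
lang_text_df = pd.DataFrame({"language": lang_text_list})

# With no path given, to_csv() returns the CSV text; index=False drops the row numbers
csv_text = lang_text_df.to_csv(index=False)
print(csv_text)

# lang_text_df.to_csv("siteGen.csv", index=False)  # write to disk instead
```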

Alternative code using the Requests and BeautifulSoup libraries

    # Import the libraries
    import requests
    import pandas as pd
    from bs4 import BeautifulSoup

    url = "http://www.staticgen.com/"
    raw_html = requests.get(url)

    # Get the text
    txt = raw_html.text

    # Get the content
    content = raw_html.content

    # Make the content cleaner
    soup = BeautifulSoup(content, 'html.parser')
    # print(soup.prettify())

    # Find all 'li' tags with the class "project"
    all_li = soup.find_all('li', {"class": "project"})

    # Test the length
    # len(all_li)

    # Clean just one individual object
    all_li[0].find('h4', {'class': "title"}).text

    # =================================
    # Let's iterate over the all_li object
    items_title = []
    items_url_title = []

    for item in all_li:
        try:
            title = item.find('h4', {'class': "title"}).text    # Titles
            url_title = item.find('h6', {"class": "url"}).text  # URLs
            # print(item.find('dl'))                            # Language
            items_title.append(title)
            items_url_title.append(url_title)
        except AttributeError:
            # Skip items that are missing a title or URL tag
            continue
        # print(item.find('h4', {'class': "title"}).text)

    # =================================
    df_title = pd.DataFrame(items_title)
    df_url = pd.DataFrame(items_url_title)
    df_title[1] = df_url

    # =================================
    # lang_text_df comes from the Selenium script above, so run both in the same session
    df_title[2] = lang_text_df
    df_title.to_csv("siteGen.csv")
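The two lists, items_title and items_url_title, can also go into a single DataFrame with named columns, which makes the CSV easier to read later. This is just a sketch with made-up sample values; the column names title and url are my choice. It works because both lists are appended to in the same loop pass, so they always have the same length.

```python
import pandas as pd

# Made-up sample values standing in for the scraped lists
items_title = ["Alpha", "Beta"]
items_url_title = ["alpha.example", "beta.example"]

# One named column per list
df = pd.DataFrame({"title": items_title, "url": items_url_title})
print(df)

# df.to_csv("siteGen.csv", index=False)  # write to disk without the row index
```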

Thank you for following.
