Data wrangling (aka data munging or data preprocessing) is the process of transforms “raw” data into a more suitable form ready for analysis. It is a vital skill in completing many data science project.

Some data wrangling methods we will use in this tutorial are: filtering, gathering/collecting, merging, grouping, concatenating, transforming, manipulating, extracting, formatting, chaining, subset, handling missing data, summarizing, combining, splitting etc. Depending on your data wrangling project objective, you will only utilize a handful of these methods per project.

Here below, you will find some data wrangling Exercises and Solutions. Lets get our hands dirty....

The dataset: All the data used are available for download from the github page.

Exercise 1:

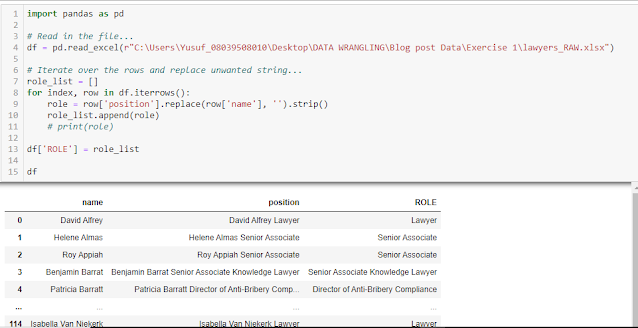

Extract the "positions" of the following list of lawyers table into a new column.

Solution 1:-

We will read the file into dataframe and then replace the names from column 'name' by empty string in column 'position'.

import pandas as pd

# Read in the file...

df = pd.read_excel(r"C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 1\lawyers_RAW.xlsx")

# Iterate over the rows and replace unwanted string...

role_list = []

for index, row in df.iterrows():

role = row['position'].replace(row['name'], '').strip()

role_list.append(role)

# print(role)

df['ROLE'] = role_list

df

Exercise 2:

Transform the tables on following web pages to a specific JSON in the given format as follow.

Table URL: https://sis.ou.edu/ted/home/byOther?stat_code=TX&sbgi_code=UTX031&trns_subj_code=&trns_subj_crse=

Name on table = JSON Attribute

---------------------------------

Name of School = from_school

Transfer Subject = from_course_department

Transfer Course = from_course_code

Transfer Title = from_course_name

Transfer Hours = from_course_credit_hours

OU Subject = to_course_department

OU Course = to_course_code

OU Title = to_course_name

OU Credits = to_course_credit_hours

Note that 'Name of School = from_school' is always indicated above the table...

Expected output result would look like this:-

Solution 2:-

Lets simply read the table into pandas dataframe using the read_html() method. Then we can either rename the columns to the new names or re-generate the columns based on the new column names while we drop/delete the unwanted columns.

import json

import pandas as pd

url = 'https://sis.ou.edu/ted/home/byOther?stat_code=TX&sbgi_code=UTX031&trns_subj_code=&trns_subj_crse='

table_UNi = pd.read_html(url)

# By re-generating the columns...

table_UNi[0]['from_school'] = 'Woodcrest College' # Obtained from table top

table_UNi[0]['from_course_department'] = table_UNi[0]['TransferSubject']

table_UNi[0]['from_course_code'] = table_UNi[0]['TransferCourse']

table_UNi[0]['from_course_name'] = table_UNi[0]['Transfer Title']

table_UNi[0]['from_course_credit_hours'] = table_UNi[0]['TransferHours']

table_UNi[0]["to_course_department"] = table_UNi[0]["OU Subject"]

table_UNi[0]["to_course_code"] = table_UNi[0]["OU Course"]

table_UNi[0]["to_course_name"] = table_UNi[0]["OU Title"]

table_UNi[0]["to_course_credit_hours"] = table_UNi[0]["OUCredits"]

table_UNi[0]["to_school"] = "University of Oklahoma" # This is CONSTANT

# Now we have the old and new columns in the df 'table_UNi[0]'...

# We can now drop/delete the old columns...

## ------ Alternatively: we could rename the needed columns and drop the others ------------

# By renaming the columns...

# new_cols = {'TransferSubject' : 'from_course_department', 'TransferCourse' : 'from_course_code',

# 'Transfer Title' : 'from_course_name', 'TransferHours' : 'from_course_credit_hours',

# 'OU Subject' : 'to_course_department', 'OU Course' : 'to_course_code', 'OU Title' : 'to_course_name',

# 'OUCredits' : 'to_course_credit_hours'}

# table_UNi[0].rename(columns = new_cols, inplace = True)

## -------------------------------------------------------------------------------------------------

final_df = table_UNi[0][['from_school', 'from_course_department', 'from_course_code', 'from_course_name', 'from_course_credit_hours',

'to_course_department', 'to_course_code', 'to_course_name', 'to_course_credit_hours', 'to_school']]

# Convert df to dict...

dict_1 = final_df.to_dict(orient='records')

# Convert dict to json...

university_json = json.dumps(dict_1)

print(university_json)Exercise 3:

Extract "spoken languages" from string into separate columns.

The data looks like this:-

If it contains string like this "Speaks Language1, Language2 and Language3" as in above, then languages (Language1, Language2 and Language3) be extracted into separate individual columns.

Solution 3:-

A quick look at the text show each row/detail starts on a new line (that is it ends with the newline character '\n'). With this, we can check if the 'Speaks' string exist and then split by '\n'

import pandas as pd

df = pd.read_excel(r"C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 3\ex3_RAW.xlsx")

def speak(aStr):

if 'Speaks' in aStr:

return aStr.split('Speaks')[1].split('\n')[0].strip()

else:

return ''

df['Speak'] = df['Language'].apply(lambda x:speak(x))

df# Remove unwanted string: ' and ' by doing a replace()

df['Speak'] = df['Speak'].apply(lambda x:x.replace(' and ', ', '))

# Convert 'String' column to list...

df_2= df.assign(Speak = df.Speak.str.split(","))

# Separating a 'List' column in individual columns/cells

df_3 = df_2['Speak'].apply(pd.Series)

# Concatenate df_2 to df_3

df_4 = pd.concat([df_2, df_3], axis=1)

df_4Exercise 4:

Split the SquirePattonBoggs data into separate files based on the profile url categories (https://www.squirepattonboggs.com/en/professionals/a/, https://www.squirepattonboggs.com/en/professionals/b/, https://www.squirepattonboggs.com/en/professionals/c/, etc).

Solution 4:-

df = pd.read_excel(r"C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 4\ex4_RAW.xlsx")

# Since we understood the url pattern, lets create a helper column to get unique records...

df['Profile_Letters'] = df['Profile'].apply(lambda x : x.replace('https://www.squirepattonboggs.com/en/professionals/', '').split('/')[0])

# Now we can get unique records and group them...

unique_group_item = df.Profile_Letters.unique()

print(unique_group_item)

# Group based on the 'Profile_Letters'...

groupby_letters = df.groupby('Profile_Letters')

groupby_letters.first() # First entry in each group...# Get all group entries and save to file...

for g in unique_group_item:

temp_df = groupby_letters.get_group(g)

temp_df.to_excel(f'ex4_RESULT//{g}.xlsx', index=False)

print('Done...')The final output would look like this... notice that there is a group for 'š' character :).

Exercise 5:

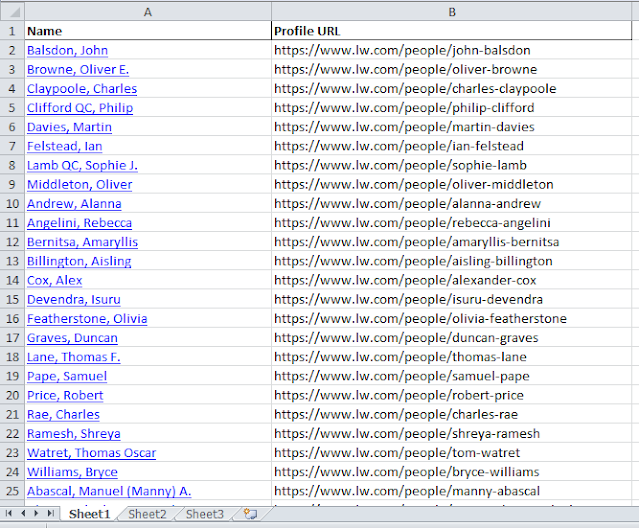

Extract the hyperlinks to fill 'Profile URL' column on the given excel file.

Solution 5:-

For this kind of data wrangling task, I couldn't find a way to do it with pandas. So, I looked elsewhere and found that openpyxl module (which you can install using pip) is capable for this kind of task.

import openpyxl

# Read or Load the excel file...

work_book = openpyxl.load_workbook(r"C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 5\ex5_RAW.xlsx")

# Read the sheet to use by name or active...

work_sheet = work_book['Sheet1']

# work_sheet = work_book.active

# Access sheet cells...

# This will get the value on: 6th row, 1st column...

print(work_sheet['A6'].value)

print(work_sheet.cell(row=6, column=1).value)

# This will get the hyperlink on: 6th row, 1st column...

print(work_sheet['A6'].hyperlink.target)

print(work_sheet.cell(row=6, column=1).hyperlink.target)

# Alternatively, access entire sheet column...

print(work_sheet['A'][5].value)

print(work_sheet['A'][5].hyperlink.target)

# Loop over column and get the text and hyperlink...

cell_value = []

cell_link = []

for cell in work_sheet['A']:

cell_value.append(cell.value)

try:

cell_link.append(cell.hyperlink.target)

except AttributeError:

cell_link.append(None)

print('Done...')

# At this point, we got what we wanted in these lists: cell_value and cell_link

# We can write it to file using openpyxl or pandas or anything else!Bonus solution

# using openpyxl

i = 1

for value, link in zip(cell_value, cell_link):

work_sheet['C' + str(i)] = value

work_sheet['D' + str(i)] = link

i += 1

# Save the file...

work_book.save(filename = r"C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 5\ex5_RESULT.xlsx")

print('Five saved...')# using pandas

df = pd.DataFrame()

df['Name'] = cell_value

df['Profile URL'] = cell_link

# To get rid of the unwanted first row, we can drop the row Or delete first element from the list

# Save the file...

df.to_excel('ex5_RESULT.xlsx', index=False)

print('Five saved...')Exercise 6:

Create folder for each entry in the country column and save its corresponding country table within the folder.

df = pd.read_excel(r"C:\Users\Yusuf_08039508010\Desktop\ex6_RAW.xlsx")

# Get unique country records and group them...

unique_country = df.Country.unique() # To be used later in creating folders

print(unique_country)

# Groupby Country

groupby_country = df.groupby('Country')

# Get all group entries and save to file...

for g in unique_country:

temp_df = groupby_country.get_group(g)

temp_df.to_excel(f'ex6_RESULT//{g}.xlsx', index=False)

print('Done...')import glob

import shutil

unique_country = df.Country.unique()

country_file = glob.glob('ex6_RESULT/*.xlsx')

# Note that the length of 'unique_country' and 'country_file' are thesame...

# Create empty folders for the countries...

for country in unique_country:

print('Creating folder for...', country)

os.mkdir(f'Data_folder/{country}')

# Move/Copy flags to respective folders...

for file in country_file:

# Construct the destination_folder...

destination_folder = 'Data_folder/' + file.split('\\')[-1].split('.')[0]

# Copy file to folder...

print('Copying or moving...', file)

shutil.copy(file, destination_folder) # Copy

# shutil.move(flg, destination_folder) # Move

print('Done....')Exercise 7:

Group these country flags into their respective starting alphabet sub directory.

Solution 7:-

The solution will look like this...

import os

import glob

import shutil

flags = glob.glob(r'C:\Users\Yusuf_08039508010\Desktop\DATA WRANGLING\Blog post Data\Exercise 7\flags_RAW\*.png')

len(flags)

# Get the first character of each flag name...

first_char = set([x.split('\\')[-1][0] for x in flags])

# Create empty folders for the characters...

for f in first_char:

print('Creating folder for...', f)

os.mkdir(f'Alphabetical_folder/{f}')

# Move/Copy flags to respective folders...

for flg in flags:

# Construct the destination_folder...

destination_folder = 'Alphabetical_folder/' + flg.split('\\')[-1][0]

# Copy flag to folder...

print('Creating folder for...', flg)

shutil.copy(flg, destination_folder) # Copy

# shutil.move(flg, destination_folder) # Move

print('Done...') Now if you open a sub-folder only the flags that start with the sub-folder character will be present in the folder. For example, in sub-folder "P" only country flags names starting with 'P' will be found there as seen below:-

Take Home Exercise

Q1) Reverse the process above to copy the flags from all sub-folders into one folder.

Q2) Group the country flags into their respective continents sub directory. That is, you will group them into seven folders named: Asia, Africa, North America, South America, Antarctica, Europe, and Australia

Exercise 8:

Reversing the order of this table by rows and columns.

Solution 8:-

This is quite a simple exercise to complete. All we have to do is to rearrange the order of the table as follow:-

a) by rows means from bottom to top

That is the last row will be the first row. In this case, the ranking rows will now be from 100, 99, 98, 97, to 1 instead of from 1, 2, 3, 4 to 100.

# Reversing the order of ROWS

df[::-1].reset_index(drop=True)

# Or

df.iloc[::-1]b) by column means from right to left

That is the columns will be rearranged from "Ranking, University, University Link, Details, Country, Impact Rank, Openness Rank, Excellence Rank" to "Excellence Rank, Openness Rank, Impact Rank, Country, Details, University Link, University, Ranking".

# Reversing the order of COLUMNS

df[df.columns[::-1]]

# Or

df.iloc[:, ::-1]Exercise 9:

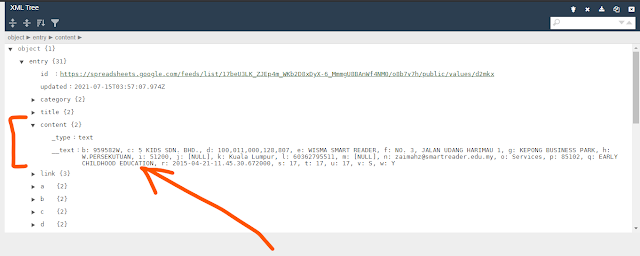

Extract company data from XML pages. See sample below:-

Solution 9:-

If we view the XML tree, it has one root element named "entry" with 31 sub-elements. The element that contains the data we wanted is the fifth sub-element named "content".

import re

import requests

import pandas as pd

import xml.etree.ElementTree as ET

xml_list = ['https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cokwr', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cpzh4', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cre1l', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/chk2m', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/ciyn3', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/ckd7g', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/clrrx', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cyevm', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cztg3', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/d180g', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/d2mkx', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cssly', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cu76f', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cvlqs', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/cx0b9', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/d9ney', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/db1zf', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/dcgjs', 'https://spreadsheets.google.com/feeds/list/17beU3LK_ZJEp4m_WKb2D8xDyX-6_MmmgU8BAnWf4NM0/o8b7v7h/public/values/ddv49']

data_list = []

for url in xml_list:

print('Processing...', url)

# Read XML from URL...

xml_response = requests.get(url, timeout=5)

my_xml_root = ET.fromstring(xml_response.text)

# ------------------------

# Get all children...

all_children = list(my_xml_root)

# the items we wanted are in the fifth child...

all_children[4].tag

all_children[4].attrib

content_text = all_children[4].text

content_text_list = content_text.split(', ')

# Template: re.sub(regex_pattern, new_str, original_str)

content = [ re.sub(r'[a-z]: ', '', x_string) for x_string in content_text_list ]

data_list.append(content)

# break

print('Finished....')

# Generate the df...

data_list_df = pd.DataFrame(data_list)

data_list_dfMore exercises on XML parsing are available on this Jupyter Notebook.

Exercise 10:

Read this table on Expire Domain names into a pandas dataframe.

Solution 10:-

import requests

import pandas as pd

from bs4 import BeautifulSoup

url = 'https://www.expireddomains.net/expired-domains' #/?start=300#listing'

# Use requests to read page html...

res = requests.get(url)

html_data = res.text

# Use Bs4 to parse the html...

soup = BeautifulSoup(html_data, 'html.parser')

table = soup.find_all('table', {'class':'base1'})

table_df = pd.read_html(str(table[0]))

table_df[0]Conclusion

There are a lot more data wrangling tasks than the once we have done above. Hopefully you got something new to help you wrangle your data in your next project.

If you enjoyed this and would like to support me, then BuyMeACoffee.🍺

Best!

No comments:

Post a Comment